Data movement is the beating heart of modern data infrastructure. It facilitates seamless information flow across systems to power customer interactions, AI, analytics, and so much more. Powered by Flink and Debezium, Decodable handles both ETL and ELT workflows, eliminating the need for teams to compromise between data processing and movement. Beyond providing a core data infrastructure platform, Decodable addresses the challenge of data movement and stream processing pragmatically, reimagining it in a way that is unified, real-time, and cost efficient. By simplifying the most formidable data infrastructure challenge, Decodable enables teams to focus on their core strengths: innovation and delivering value.

One of the most common data movement use cases is replicating a database to durable, highly-available storage service to support a wide range of applications. In this guide, we’ll look at moving data from PostgreSQL to Amazon S3. PostgreSQL (or more simply Postgres) is one of the most popular online transaction processing (OLTP) database systems. OLTP workloads are optimized for database inserts and storage, with a wide variety of applications including banking, online shopping, and system logging. Similarly, S3 is among the most popular object storage services. Storage systems are optimized for the retention of massive amounts of unstructured data, and are typically used as data lakes and data warehouses to support business applications, photo and video archiving, websites, and more.

Postgres Overview

PostgreSQL is a powerful, open source object-relational database system that uses and extends the SQL language combined with many features that safely store and scale the most complicated data workloads. The origins of PostgreSQL date back to 1986 as part of the POSTGRES project at the University of California at Berkeley and has more than 30 years of active development on the core platform.

PostgreSQL has earned a strong reputation for its proven architecture, reliability, data integrity, robust feature set, extensibility, and the dedication of the open source community behind the software to consistently deliver performant and innovative solutions. PostgreSQL runs on all major operating systems, has been ACID-compliant since 2001, and has powerful add-ons such as the popular PostGIS geospatial database extender. These days, Postgres is available as a managed database in the cloud from all of the major cloud providers, as well as a number of independent companies. It is no surprise that PostgreSQL has become the open source relational database of choice for many people and organizations.

The traditional approach to syncing PostgreSQL with complementary data stores is batch-based, where pipelines extract data from PostgreSQL and send it to downstream data stores. Change data capture (CDC) is a modern alternative to inefficient bulk imports. CDC extracts change events (INSERTs, UPDATEs, and DELETEs) in real-time from data stores, such as PostgreSQL, and provides them to downstream systems which can be applied to a copy of the data, as we’ll show here.

Amazon S3 Overview

S3 is a fully managed software-as-a-service (SaaS) that provides a single platform for data warehousing, data lakes, data engineering, data science, data application development, and secure sharing and consumption of real-time/shared data. S3 features out-of-the-box features like separation of storage and compute, on-the-fly scalable compute, data sharing, data cloning, and third-party tools support in order to handle the demanding needs of growing enterprises.

The S3 data platform is not built on any existing database technology or “big data” software platforms such as Hadoop. Instead, S3 combines a completely new SQL query engine with an innovative architecture natively designed for the cloud. To the user, S3 provides all the functionality of an enterprise analytic database, along with many additional special features and unique capabilities. S3 runs completely on cloud infrastructure. All components of S3’s service (other than optional command line clients, drivers, and connectors), run in public cloud infrastructures. S3 uses virtual compute instances for its compute needs and a storage service for persistent storage of data. S3 cannot be run on private cloud infrastructures (on-premises or hosted) as it is not a packaged software offering that can be installed by a user. S3 manages all aspects of software installation and updates.

Prerequisites

Using PostgreSQL with Decodable

Before you can get data from PostgreSQL, the following requirements must be met:

- Your PostgreSQL database must be accessible from the Decodable network.

- Your Postgres database must have the logical decoding feature enabled, which provides the ability to stream data changes to external consumers.

- Your PostgreSQL database user must have sufficient permissions to create PostgreSQL publications.

Using Amazon S3 with Decodable

Before you send data from Decodable into S3, you must have an Identity and Access Management (IAM) role with the following policies (see the Setting up an IAM User section for more information):

- A Trust Policy that allows access from Decodable’s AWS account. The <span class="inline-code">ExternalId</span> must match your Decodable account name.

- A Permissions Policy with read and write permissions for the destination bucket.

Create Connectors

Follow the steps in the sections below to get data from PostgreSQL into Decodable, optionally transform it, and then from Decodable to S3. These steps assume that you are using the Decodable web interface. However, if you want to use the Decodable CLI to create the connection, you can refer to the Decodable documentation for PostgreSQL and Amazon S3 for information about what the required property names are.

Create a PostgreSQL Source Connector

- From the Connections page, select PostgreSQL CDC and complete the required fields.

- Select the stream that you’d like to connect to this connector. Then, select Next.

- Define the connection’s schema. Select New Schema to manually enter the fields and field types present or Import Schema if you want to paste the schema in Avro or JSON format.

<ol type="a"><li>The stream's schema must match the schema of the data that you plan on sending through this connection.</li><li>You must designate one or more fields to use as a primary key. A primary key is a field that contains a value that can be used to uniquely identify each row in a table. To specify a primary key, you must first explicitly tell Decodable that the type is not null.</li></ol> - Select Next when you are finished providing defining the connection’s schema.

- Give the newly created connection a Name and Description and select Save.

- Start your connection to begin processing data from PostgreSQL.

Create an Amazon S3 Sink Connector

- From the Connections page, select the Amazon S3 connector and complete the required fields.

- Select which stream contains the records that you’d like to send to Amazon S3. Then, select Next.

- Give the newly created connection a Name and Description and select Save.

- If you are replacing an existing Amazon S3 connection, then restart any pipelines that were processing data for the previous connection.

- Finally, Start your connection to begin ingesting data.

Additional information is available in the Decodable documentation to help with:

- Setting up an IAM User

- JSON Format Properties

- Parquet Format Properties

- S3 Object Key Partitioning

- S3 Object Key Formation

At this point, you have data streaming in real-time from PostgreSQL to S3!

Processing Data In Real-time With Pipelines

A pipeline is a set of data processing instructions written in SQL or expressed as an Apache Flink job. Pipelines can perform a range of processing including simple filtering and column selection, joins for data enrichment, time-based aggregations, and even pattern detection. When you create a pipeline, you define what data to process, how to process it, and where to send that data to in either a SQL query or a JVM-based programming language of your choosing such as Java or Scala. Any data transformation that the Decodable platform performs happens in a pipeline. To configure Decodable to transform streaming data, you can insert a pipeline between streams. As we saw when creating an S3 connector above, pipelines aren’t required simply to move or replicate data in real-time.

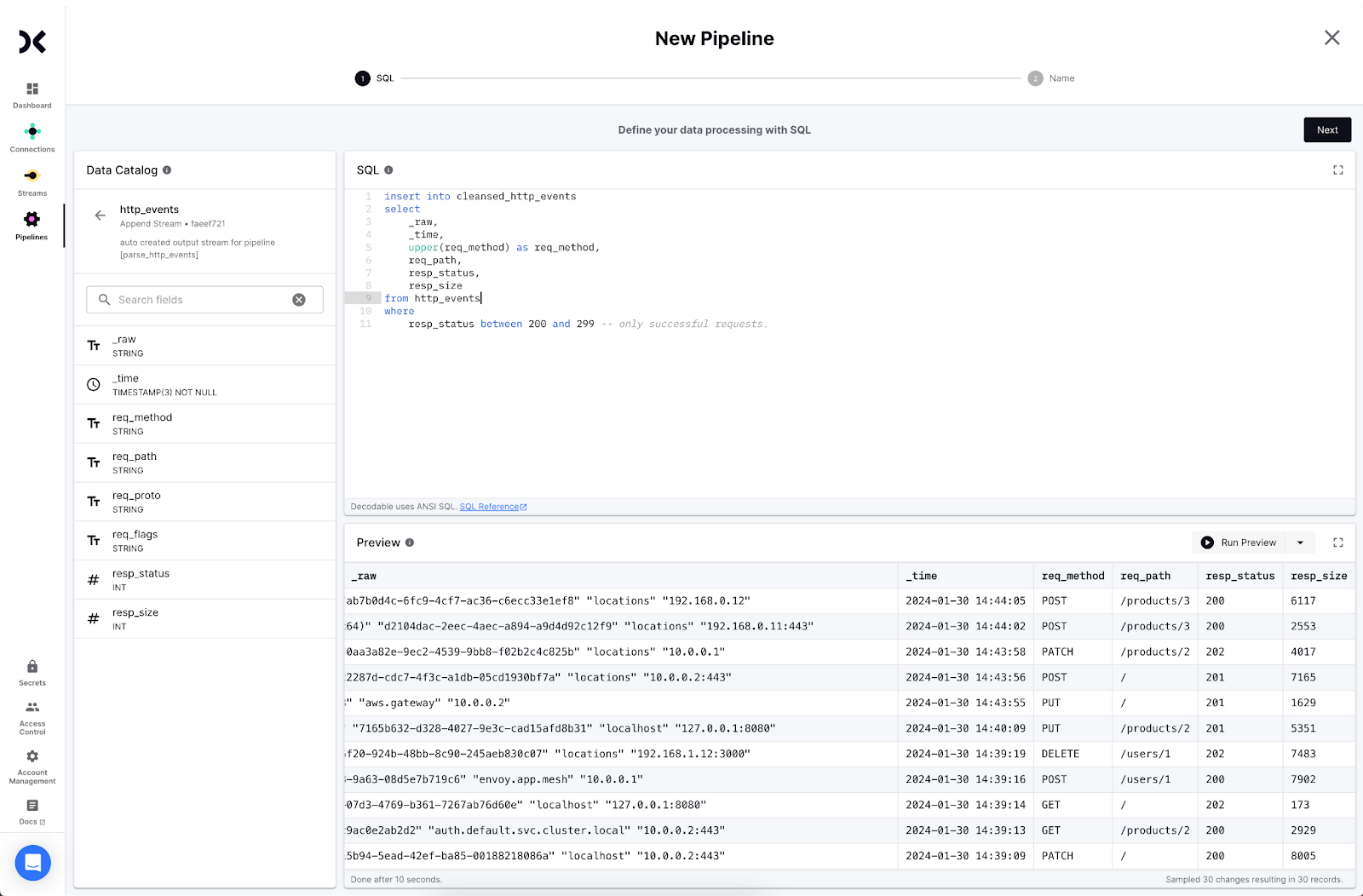

Create a Pipeline Between the PostgreSQL and S3 Streams

As an example, you can use a SQL query to cleanse the PostgreSQL data to meet specific compliance requirements or other business needs when it lands in S3. Perform the following steps:

- Create a new Pipeline.

- Select the stream from PostgreSQL as the input stream and click Next.

- Write a SQL statement to transform the data. Use the form: <span class="inline-code">insert into <output> select … from <input></span>. Click Next.

- Decodable will create a new stream for the cleansed data. Click Create and Next to proceed.

- Provide a name and description for your pipeline and click Next.

- Start the pipeline to begin processing data.

The new output stream from the pipeline can be written to S3 instead of the original stream from PostgreSQL. You’re now streaming transformed data into S3 from PostgreSQL in real-time.

Conclusion

Replicating data from OLTP databases like PostgreSQL to S3 in real-time helps you to manage storage costs, meet regulatory requirements, reduce latency, and save multiple distinct copies of your data for compliance requirements. It’s equally simple to cleanse data in flight so it’s useful as soon as it lands. In addition to reducing latency to data availability, this frees up data warehouse resources to focus on critical analytics, ML, and AI use cases.

.svg)

.svg)